The database company Couchbase has added vector search to Couchbase Capella and Couchbase Server.

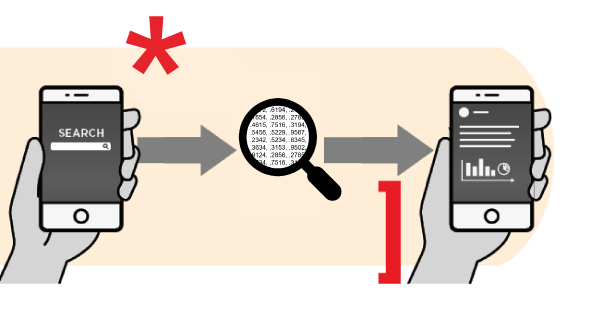

According to the company, vector search lets a query surface similar objects even when they are not an exact match, because it returns “nearest-neighbor results.”

Vector search also supports text, images, audio, and video by first converting them to mathematical representations (vectors). This makes it well suited to AI applications that work with any or all of those formats.
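To make the nearest-neighbor idea concrete, here is a minimal sketch in plain Python. It is not Couchbase's API: the three-dimensional vectors, the catalog entries, and the function names are all made up for illustration, and a real system would get its vectors from an embedding model and use an index rather than a full scan.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def nearest_neighbors(query, items, k=2):
    """Return the names of the k items whose vectors are most similar to the query."""
    ranked = sorted(
        items,
        key=lambda name: cosine_similarity(query, items[name]),
        reverse=True,
    )
    return ranked[:k]

# Hypothetical embeddings; real ones typically have hundreds of dimensions.
catalog = {
    "running shoes": [0.9, 0.1, 0.0],
    "trail sneakers": [0.7, 0.5, 0.1],
    "coffee maker": [0.0, 0.2, 0.9],
}

# Stand-in for the embedding of a query such as "jogging footwear".
query_vector = [0.85, 0.2, 0.05]

print(nearest_neighbors(query_vector, catalog, k=2))
```

None of the catalog names contains the query's words, yet the two footwear items rank ahead of the coffee maker because their vectors point in nearly the same direction as the query vector.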

Couchbase believes that semantic search powered by vector search and assisted by retrieval-augmented generation (RAG) will help reduce hallucinations and improve response accuracy in AI applications.
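The retrieval-augmented pattern can be sketched roughly as follows: find the stored passage closest to the question, then place it in the prompt so the model answers from supplied context rather than from memory alone. This is an illustrative outline only. The documents, vectors, and helper names are hypothetical, the similarity measure is a simple dot product, and the call to an actual LLM is left out.

```python
def dot(a, b):
    """Dot product, used here as a simple similarity score."""
    return sum(x * y for x, y in zip(a, b))

def retrieve(query_vector, documents, k=1):
    """Return the text of the k documents most similar to the query vector."""
    ranked = sorted(
        documents,
        key=lambda d: dot(query_vector, d["vector"]),
        reverse=True,
    )
    return [d["text"] for d in ranked[:k]]

def build_prompt(question, passages):
    """Combine retrieved passages and the question into a single prompt string."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )

# Hypothetical knowledge base with made-up two-dimensional embeddings.
documents = [
    {"text": "Orders ship within two business days.", "vector": [0.9, 0.1]},
    {"text": "Refunds are issued within 14 days of return.", "vector": [0.1, 0.9]},
]

question = "How long do refunds take?"
question_vector = [0.2, 0.8]  # stand-in for the question's embedding

prompt = build_prompt(question, retrieve(question_vector, documents, k=1))
print(prompt)  # this string would then be sent to an LLM
```

Grounding the prompt in retrieved text is what the hallucination claim rests on: the model is asked to work from the passage it was handed instead of guessing.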

Couchbase believes that adding vector search to its database platform will help customers build personalized AI-powered applications.

“Couchbase is seizing this moment, bringing together vector search and real-time data analysis on the same platform,” said Scott Anderson, SVP of product management and business operations at Couchbase. “Our approach provides customers a safe, fast and simplified database architecture that’s multipurpose, real time and ready for AI.”

The company also announced integrations with LangChain and LlamaIndex. LangChain provides a common API for interacting with LLMs, while LlamaIndex gives developers a range of LLMs to choose from.

“Retrieval has become the predominant way to combine data with LLMs,” said Harrison Chase, CEO and co-founder of LangChain. “Many LLM-driven applications demand user-specific data beyond the model’s training dataset, relying on robust databases to feed in supplementary data and context from different sources. Our integration with Couchbase provides customers another powerful database option for vector store so they can more easily build AI applications.”